Published: Oct 10, 2024

Governance for Generative AI

Introduction

Generative AI (GenAI) has emerged as a groundbreaking technology with immense potential to revolutionise various aspects of our society. From enabling digital transformation in both large corporations and small organisations, to enhancing creativity and productivity, GenAI, such as ChatGPT, has opened new avenues for innovation and progress. However, while we celebrate the possibilities offered by this technology, it is crucial to acknowledge that its unrestricted use can give rise to significant risks and unintended consequences.

In the context of unrestricted use of GenAI, we delve into the multifaceted nature of the technology, particularly ChatGPT, and the need for an appropriate governance framework to ensure responsible and beneficial deployment. We explore the inherent risks associated with the unbridled use of ChatGPT and shed light on potential challenges that may arise if adequate precautions are not taken.

Recognising the importance of a balance between harnessing the power of GenAI and safeguarding against its potential pitfalls, we aim to equip individuals, organisations, and policymakers with a comprehensive understanding of the risks involved. Moreover, we provide a set of general guidelines that can serve as a starting point for mitigating these risks to ensure the responsible and ethical use of ChatGPT.

Through the establishment of effective governance measures, we can foster an environment where ChatGPT and similar technologies can thrive, to the benefit of society.

The rapid growth and use of GenAI in many applications is due to its potential benefits in multiple dimensions:

- Increased productivity: GenAI can help people to be more productive by automating tasks that are time-consuming or repetitive. For example, GenAI can be used to automate email responses for simple enquiries, schedule appointments, and summarise interactions.

- Enhanced creativity: GenAI can help people to be more creative by providing them with innovative ideas and inspiration. For example, it can be used to generate new product designs, musical compositions, and works of art.

- Personalised experiences: GenAI can be used to create personalised experiences for users. For example, it can be used to recommend products to customers, generate tailored news feeds, and create custom educational content.

However, the use of GenAI can be a double-edged sword, as the technology also poses several potential risks [IMDA23a, Gartner23]:

- Model explainability and interpretability: GenAI models can be very complex and difficult to understand. This can make it difficult to determine how they are making decisions and to identify potential biases or errors.

- Data quality and bias: GenAI models are trained on large datasets of existing content. If these datasets are biased, the models will also be biased. This can lead to the generation of content that is biased and discriminatory.

- Safety and security: GenAI models can be used to create malicious content, such as deepfakes or spam. It is important to develop safeguards to prevent the misuse of Generative AI models.

- Copyright infringement: GenAI models can be used to create works that are substantially similar to existing copyrighted works. If the model has been trained on copyrighted data, without permission, then the use of the model to create new works could be considered copyright infringement.

- Legal and ethical implications: The use of GenAI raises a number of legal and ethical issues, such as copyright, privacy, and liability. It is important to develop clear guidelines for the responsible use of GenAI.

- Economic and social impact: The widespread use of GenAI could have a significant impact on the economy and society. For example, it could be used to automate tasks that are currently performed by humans, which could lead to job losses. Additionally, GenAI could be used to create new products and services that could have a disruptive impact on existing industries.

In addition to these challenges, it is also important to be aware of the potential for GenAI to be used for malicious purposes. For example, it could be used to create fake news articles, social media posts, or even videos. This content could be used to spread misinformation and disinformation, which could have a negative impact on society.

Arising challenges

How do we strike a balance between enhanced productivity and the unethical use of ChatGPT? There are a few reasons why such a balancing act is challenging:

- ChatGPT is a powerful tool that can be used for both good and bad. On the one hand, ChatGPT can be used to automate tasks, generate creative content, and improve communication. On the other hand, it can also be used to generate harmful or misleading content, such as fake news or propaganda.

- It can be difficult to distinguish between ethical and unethical use. In some cases, it may be clear that ChatGPT is being used unethically. For example, if someone is using ChatGPT to generate fake news, it is clear that they are using the tool for malicious purposes. However, in other cases, it may be more difficult to determine whether ChatGPT is being used unethically. For example, if someone is using ChatGPT to generate creative content, it may be difficult to determine whether the content is harmful or misleading.

- There is a trade-off between productivity and safety. Implementing safeguards to prevent the unethical use of ChatGPT can reduce the tool’s productivity. For example, if ChatGPT is trained to identify and avoid generating harmful content, it may also generate less creative or less interesting content.

- It is difficult to regulate the use of innovative technologies. As new technologies emerge, it can be difficult to develop effective regulations to govern their use. This is especially true for technologies like ChatGPT, which are constantly evolving.

As a result of these challenges, it is non-trivial to strike a balance between reaping productivity gains and the unrestricted or unethical use of ChatGPT. More work needs to be done to mitigate the risks and ensure that the tool is used in a responsible manner.

Does OpenAI have the legal right to use the personal information of individuals to train ChatGPT?

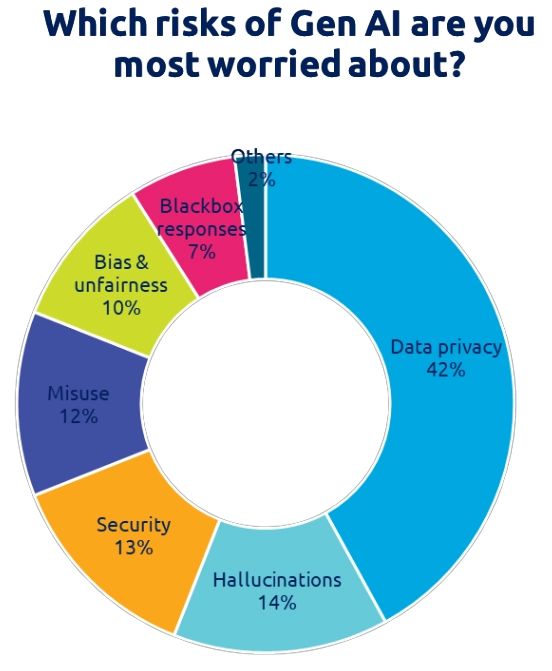

According to Gartner, data privacy is the biggest concern based on a survey of 713 IT Executives. This is summarised in Figure 1 below.

Figure 1. Risks associated with Generative AI according to 713 IT Executives (source: Gartner, Aug 2023)

In general, OpenAI is likely to have the legal right to use personal information to train ChatGPT if the data is collected and used in compliance with applicable laws and if users have consented to this use. If OpenAI collects personal information from users who have agreed to its terms of service, which state that the data may be used to train ChatGPT, then OpenAI is likely to have the legal right to use that data for this purpose.

However, there are some potential legal limitations on OpenAI’s use of personal information to train ChatGPT. Some jurisdictions have laws that restrict the collection and use of certain types of personal information, such as sensitive personal data or data pertaining to children.

Additionally, some jurisdictions have laws that give individuals the right to opt out of having their personal data used for certain purposes, such as for training AI models.

For example, the Italian data protection authority, known as Garante, temporarily banned ChatGPT at the start of April 2023 over privacy concerns and launched a probe into the application's suspected breach of privacy rules. As Garante had accused OpenAI of failing to check the age of ChatGPT's users (who are supposed to be aged thirteen or above), OpenAI said it would offer a tool to verify users' ages in Italy upon sign-up. OpenAI explained that it would also provide a new form for European Union users to exercise their right to object to its use of their personal data to train its models. Access to the ChatGPT chatbot has since been restored in Italy [McCallum23].

Responsible AI through governance

Different jurisdictions may adopt different policies and approaches

The Japanese Ministry of Education, Culture, Sports, Science and Technology (MEXT) published guidelines in July 2023, allowing limited use of Generative AI (GenAI) in elementary, junior high, and high schools. The guidelines are intended to help teachers and students understand the characteristics of the technology, while imposing some limits due to fear of copyright infringement, personal information leaks, and plagiarism [Kyodo23].

The guidelines state that Generative AI can be used for educational purposes, such as:

- Generating text in creative formats, such as poems, code, scripts, musical pieces, emails, and letters

- Translating languages

- Writing various kinds of creative content

- Answering questions in an informative way

- Helping with research and problem-solving

However, the guidelines also caution that Generative AI should not be used for the following purposes:

- Plagiarism: Passing off AI-assisted schoolwork as one’s own will be deemed cheating.

- Copyright infringement: Using Generative AI to create content that infringes on the copyright of others is prohibited.

- Personal information leaks: Using Generative AI to generate content that contains personal information about others is prohibited.

- Hate speech and misinformation: Using Generative AI to generate content that is hateful, misleading, or discriminatory is prohibited.

The guidelines also state that elementary school students younger than age 13 can use AI, to a certain extent, under the guidance of teachers.

The MEXT’s decision to allow limited use of Generative AI in schools was a significant step, as Japan became one of the first countries in the world with such a government policy. The guidelines are still in the initial stages, and it remains to be seen how they will be implemented in practice. However, the move is a sign that Japan is recognising the potential of Generative AI to transform education.

On the other hand, the Australian Federal Government opened an inquiry in 2023 into the use of Generative AI within its education system [Parliament23]. This was a significant development, as Australia was one of the first countries in the world to take such a step.

The inquiry will examine the following key areas:

- The potential benefits and risks of using Generative AI in education

- The ethical implications of using Generative AI in education

- The best ways to use Generative AI to improve education outcomes for all students

Schools in Australia have now officially rolled out the use of the artificial intelligence chatbot ChatGPT. The decision to allow ChatGPT in schools was made after a consultation with teachers, students, parents, and education experts. The consultation found that there is a strong demand for the use of ChatGPT in schools, and that the potential benefits of using this technology outweigh the risks [Cassidy23, Beaumont24].

The Australian government is working with OpenAI to develop guidelines for the responsible and ethical use of ChatGPT in schools. The guidelines will cover areas such as:

- Data privacy and security

- Copyright and intellectual property

- Plagiarism and academic integrity

- Bias and discrimination

Where to start?

To address the ethical and societal concerns surrounding AI, including Generative AI, it is essential to develop and operationalise an effective governance framework within an organisation. Such a framework should be designed to ensure that AI is used in a responsible and ethical manner.

Some of the overarching principles of effective AI governance include:

- Transparency: AI systems should be designed in a way that is transparent and accountable. This means that it should be possible to understand how these systems work and to identify the biases that they may contain.

- Fairness: AI systems should be designed in a way that is fair and does not discriminate against any particular group of people. AI systems should be trained on datasets that are representative of the population, and they should be used in a way that does not perpetuate existing biases.

- Accountability: There should be clear lines of accountability for the development and use of AI systems, and it should be clear who is responsible for ensuring that these systems are used in a responsible and ethical manner.

- Safety: AI systems should be designed in a way that is safe and does not pose a risk to human safety or security. AI systems should be tested for potential vulnerabilities, and they should be equipped with safeguards to prevent misuse.

- Privacy: AI systems should respect user privacy. They should not collect or use any personal data without the user’s consent.

Once the overarching AI principles have been defined, a governance framework that sets out how these principles will be put into practice needs to be developed. This framework should include policies and procedures for the development, deployment, monitoring, and evaluation of AI systems.

Here are some examples of key elements of an AI governance framework:

- AI ethics committee: An AI ethics committee should be established to review all AI projects and ensure that they are aligned with the organisation’s AI principles.

- AI risk assessment: All AI projects should be subjected to a risk assessment to identify and mitigate any potential risks.

- AI monitoring: AI systems should be monitored to ensure that they are performing as expected and that they are not having any unintended negative consequences.

- AI impact assessment: AI systems should be regularly assessed to measure their impact on individuals, society, and the environment.

The AI Risk Management Framework from the US Department of Commerce's National Institute of Standards and Technology (NIST) [NIST24] and the Model AI Governance Framework from Singapore's Infocomm Media Development Authority (IMDA) [IMDA24] provide useful guidelines and further details on how an effective governance framework might look.

AI risk and impact assessments should be conducted regularly to identify and mitigate any potential risks. These assessments should consider the following factors:

- The type of AI system: Some AI systems are riskier than others. For example, AI systems that are used to make critical decisions, such as for medical diagnoses or legal decision-making, pose higher risks than AI systems that are used for entertainment or marketing purposes.

- The data used to train the AI system: AI systems are trained on data and the quality and bias of the data can have a significant impact on the performance of the system. It is important to assess the risk of bias in the data used to train AI systems.

- The potential impact of the AI system: AI systems can have a positive or negative impact on individuals, society, and the environment. It is important to assess the potential impact of AI systems before they are deployed.

By conducting regular AI risk and impact assessments, organisations can ensure that AI systems are used in a safe and responsible manner.
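As a rough illustration, the three factors above could be captured in a simple assessment record. The sketch below is a minimal example only: the three-level scale, the field names, and the rule that the overall rating equals the highest factor are all assumptions made for illustration, and none of them is prescribed by the NIST or IMDA frameworks.

```python
from dataclasses import dataclass

# Hypothetical three-level scale; the frameworks cited above do not prescribe one.
LEVELS = {"low": 1, "medium": 2, "high": 3}


@dataclass
class RiskAssessment:
    """One assessment record covering the three factors listed above."""

    system_name: str
    system_type_risk: str         # e.g. "high" for medical or legal decision-making
    training_data_bias_risk: str  # risk of bias in the training data
    potential_impact_risk: str    # impact on individuals, society, the environment

    def overall(self) -> str:
        """Return the highest level among the three factors (an assumed rule)."""
        factors = [
            self.system_type_risk,
            self.training_data_bias_risk,
            self.potential_impact_risk,
        ]
        return max(factors, key=lambda level: LEVELS[level])

    def needs_committee_review(self) -> bool:
        """Assumed policy: anything rated above 'low' goes to the ethics committee."""
        return LEVELS[self.overall()] > LEVELS["low"]


# Example: a hypothetical medical triage assistant
assessment = RiskAssessment("triage-assistant", "high", "medium", "high")
print(assessment.overall(), assessment.needs_committee_review())
```

Taking the maximum rather than an average is a deliberately conservative choice, so that a single high-risk factor cannot be diluted by two low-risk ones. An organisation may well prefer a different aggregation rule.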

Summary

GenAI can be used to create a wide variety of content, including text, code, images, and videos. This content can be used for good or malicious purposes, so it is important to have safeguards in place to prevent GenAI from being used maliciously.

Some recommended practices that organisations should consider when adopting a responsible approach to AI, in particular with the rise of GenAI and ChatGPT, are as follows:

- Approve only a certain set of use cases: Decide which use cases for ChatGPT are acceptable and which are not. For example, an organisation might allow ChatGPT to be used to generate personalised learning materials for students, but not to create fake news articles or deepfakes.

- Avoid usage of certain input data: ChatGPT is trained on a massive dataset of text and code which could contain personal information, confidential data, or biased content. It is important to avoid using ChatGPT to process any type of data that is sensitive or that could be used to harm or discriminate against individuals or groups.

- Verify the accuracy of output from ChatGPT: ChatGPT is a powerful language model, but it is not perfect. It is important to verify the accuracy of any output from ChatGPT before using it. This is especially important for high-stakes applications, such as medical diagnosis or legal decision-making.

- Consider legal issues: When using ChatGPT, it is important to be aware of the relevant laws and regulations. It is critical to ensure that no privacy laws are violated during the data collection process and that no copyrights are infringed through the generation of content.
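The first two recommendations lend themselves to a simple automated check placed in front of the model. The following is a minimal sketch under stated assumptions: the use-case names, the `check_request` function, and the two regular expressions are hypothetical and purely illustrative. Real screening for sensitive data would need far more thorough detection than these patterns provide.

```python
import re

# Hypothetical allowlist; each organisation would define its own approved use cases.
APPROVED_USE_CASES = {"learning_materials", "email_drafting", "meeting_summary"}

# Deliberately simple patterns for illustration only.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "long digit sequence": re.compile(r"\b\d{8,}\b"),
}


def check_request(use_case: str, prompt: str) -> list[str]:
    """Return the reasons a request should be blocked (empty list means allowed)."""
    reasons = []
    if use_case not in APPROVED_USE_CASES:
        reasons.append(f"use case '{use_case}' is not approved")
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(prompt):
            reasons.append(f"prompt appears to contain: {label}")
    return reasons


# Example: an unapproved use case with personal data in the prompt
for reason in check_request("fake_news", "Contact jane.doe@example.com on 91234567"):
    print(reason)
```

Returning a list of reasons rather than a single yes/no answer makes it easier to tell users why a request was declined, which supports the transparency and reporting practices an organisation may want alongside such a check.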

In addition to these recommendations, it is also important to be transparent about how ChatGPT is used. Organisations should inform users about what data is being collected, how it is being used, and what they can expect from the output of the model. It is also important to have a process in place for users to report any problems or concerns they have about the use of ChatGPT.

By taking these steps, we will go a long way towards ensuring that ChatGPT is used in a responsible and ethical way.

References

[IMDA23a] Generative AI: Implications for Trust and Governance. The Infocomm Media Development Authority (IMDA) and Aicadium, 7 Jun 2023.

[Gartner23] Gartner Experts Answer the Top Generative AI Questions for Your Enterprise. https://www.gartner.com/en/topics/generative-ai. Accessed on 24 Oct 2023.

[McCallum23] S. McCallum. ChatGPT accessible again in Italy. BBC News, https://www.bbc.com/news/technology-65431914. 28 Apr 2023.

[Kyodo23] Japan publishes guidelines allowing limited use of AI in schools. Kyodo News, https://english.kyodonews.net/news/2023/07/ac1ce46ce503-japan-publishes-guidelines-allowing-limited-use-of-ai-in-schools.html. 4 Jul 2023.

[Parliament23] Inquiry into the use of generative artificial intelligence in the Australian education system. Parliament of Australia, https://www.aph.gov.au/Parliamentary_Business/Committees/House/Employment_Education_and_Training/AIineducation. Accessed on 25 Oct 2023.

[Cassidy23] C. Cassidy. Artificial intelligence such as ChatGPT to be allowed in Australian schools from 2024. The Guardian, https://www.theguardian.com/australia-news/2023/oct/06/chatgpt-ai-allowed-australian-schools-2024. 6 Oct 2023.

[Beaumont24] D. Beaumont. A New Era of Technology: ChatGPT within Australian Schools. The City Journal, https://thecityjournal.net/news/a-new-era-of-technology-chatgpt-within-australian-schools/. 24 May 2024.

[NIST24] NIST AI Risk Management Framework: Generative Artificial Intelligence Profile, NIST AI 600-1, https://doi.org/10.6028/NIST.AI.600-1. Jul 2024.

[IMDA24] IMDA Model AI Governance Framework, https://aiverifyfoundation.sg/wp-content/uploads/2024/05/Model-AI-Governance-Framework-for-Generative-AI-May-2024-1-1.pdf. 30 May 2024.